New guidance draws a line between behind-the-scenes tools and content players see.

Valve has quietly updated its AI disclosure guidance on Steam, tightening up the language around what developers do, and do not, need to declare. The short version: using AI-powered tools to speed up development is not the issue. Shipping a game that includes generative AI content still is.

The change, first spotted and posted on LinkedIn by Simon Carless, founder of GameDiscoverCo, clarifies that studios using AI-assisted tools such as “code helpers” do not need to flag this on their Steam store page, as long as no generative AI content ends up in the final product. That covers artwork, music, narrative text, localisation, and other assets that players actually see or hear.

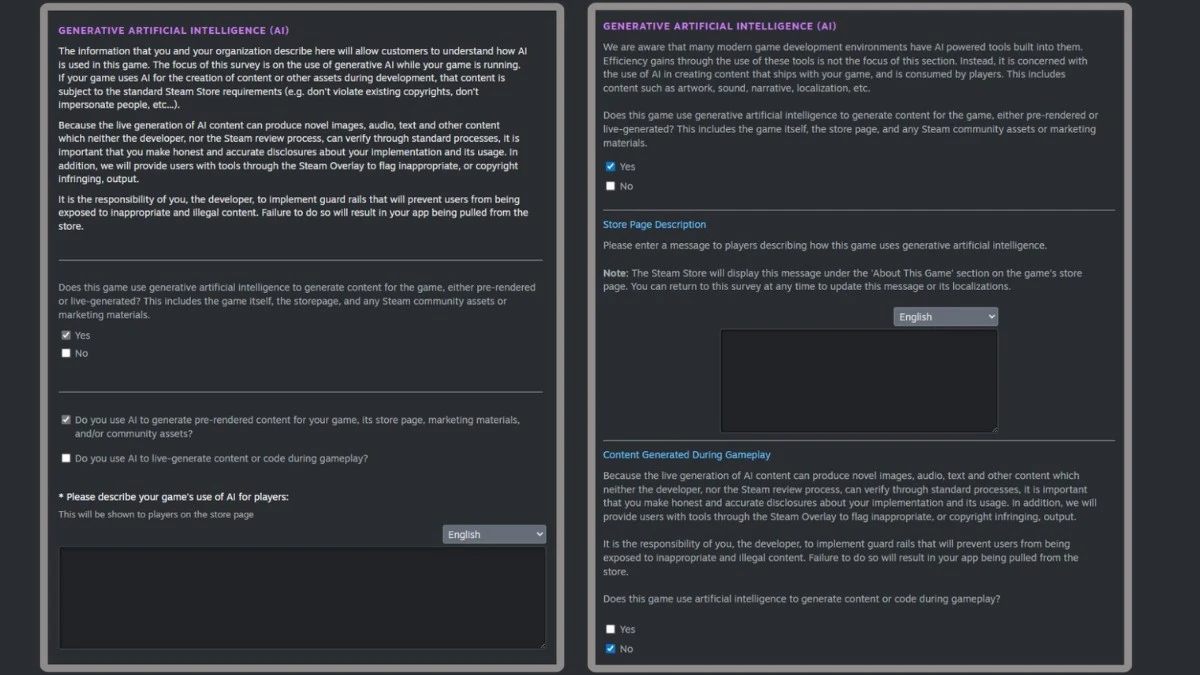

Valve spells this out directly on its updated developer disclosure page. “We are aware that many modern games development environments have AI-powered tools built into them,” the page now reads. “Efficiency gains through the use of these tools is not the focus of this section. Instead, it is concerned with the use of AI in creating content that ships with your game, and is consumed by players. This includes content such as artwork, sound, narrative, localisation, etc.”

Steam still wants transparency on AI-generated content that reaches players

Despite the softer tone around internal tooling, Valve is not backing away from disclosure entirely. Developers publishing their games on Steam must still answer a clear yes or no to the question: “Does this game use generative artificial intelligence to generate content for the game, either pre-rendered or live-generated? This includes the game itself, the store page, and any Steam community assets or marketing materials.”

In practice, this means that if AI helps a team write cleaner code or optimise workflows behind the scenes, Valve does not consider that something players need to know about. If AI is involved in creating content that ships with the game or its marketing, players are expected to be informed.

Reaction from developers and players has been mostly positive. LinkedIn user Sabin VanderLinden described the change as “a really thoughtful middle ground that actually makes sense for developers,” adding that separating internal tools from shipped content shows that Valve understands how teams actually work, while still putting players first.

Others, like user Samuel Cohen, also pointed out that “In a moment when even Unreal Engine has its own AI assistant chat bot, this makes a lot of sense.”

While the feedback is largely positive, some want further clarification on the policy. User Jon Hanson was among those asking for it. “Where does the line get drawn on the marketing side is my question. Everyone's use cases on that side goes to creative assets,” he said. “But what if you use LLM to scrape reviews for sentiment, do competitive analysis, customer segmentation, etc. Does that count? Or is it viewed more as "code helpers" on the dev side.” However, others felt that the guidelines were already clear enough.

This clarification comes at a time when Steam continues to grow at a fast pace. Valve’s platform recently passed 42 million concurrent users, setting another all-time record, and with that scale comes increasing pressure to balance developer freedom with player trust.

The update also lands against a wider industry debate. Late last year, Epic Games CEO Tim Sweeney took aim at disclosure rules, arguing that “Steam and all digital marketplaces need to drop the ‘Made with AI’ label. It doesn’t matter anymore.”